WebAssembly on Kubernetes: What You Need to Know

As with many innovative technologies, different people have different views on where WebAssembly fits in the technology landscape.

WebAssembly (also called Wasm) is certainly the subject of a lot of hype right now. But what is it? Is it a JavaScript killer? Is it a new programming language for the web? Is it (as we like to say) the next wave of cloud compute? We've heard it called many things: a better eBPF, an alternative to RISC-V, a competitor to Java (or Flash), a performance booster for browsers, a replacement for Docker.

How to think about WebAssembly

In this post, I will stay away from these discussions and focus only on how to use WebAssembly on Kubernetes.

Approach and use case

Unlike regular programming languages, you don't write WebAssembly directly: you write code that compiles to WebAssembly. Currently, Go and Rust are the main source languages. I know that Kotlin and Python are working toward this goal. There may be other languages I'm not aware of.

I settled on Rust for this post because of my familiarity with the language. In particular, I will keep the same code across three different architectures:

- Rust compiled to a regular native binary, as the baseline
- Rust to WebAssembly using an embedded runtime
- Rust to WebAssembly using an external runtime

Don't worry; I will explain the difference between the last two later.

The use case should be more advanced than Hello World to highlight WebAssembly's capabilities. I implemented an HTTP server that mimics a single endpoint of the excellent httpbin API testing tool. The code itself is not essential because the post is not about Rust, but if you are interested, you can find it on GitHub. I add a field to the response that makes the underlying approach explicit: native, embed, or runtime, respectively.

Baseline: regular Rust to native

For regular native compilation, I use a multi-stage Dockerfile:

FROM rust:1.84-slim AS build #1

RUN <<[...]

- Start from the latest Rust image
- Heredocs for the win
- Install the necessary build toolchain
- Compile
- I could use FROM scratch, but after reading this, I prefer to use distroless
- Copy the executable from the previous compilation stage

The final wasm-kubernetes:native image weighs 8.71MB, with its base image distroless/static accounting for 6.03MB of that.

Adapting to WebAssembly

The main idea behind WebAssembly is that it is secure because it cannot access the host system. However, to run an HTTP server, we must open a socket to listen for incoming requests. WebAssembly cannot do that. We need a runtime that provides this feature and other system-dependent capabilities. That is the goal of the WebAssembly System Interface.

The WebAssembly System Interface (WASI) is a group of standards-track API specifications for software compiled to the W3C WebAssembly (Wasm) standard. WASI is designed to provide a secure standard interface for applications that can be compiled to Wasm from any language, and that may run anywhere, from browsers to clouds to embedded devices.

Introduction to WASI

The v0.2 specification defines the following system interfaces:

- Clocks
- Random
- Filesystem
- Sockets
- CLI
- HTTP

A couple of runtimes already implement the specification:

- Wasmtime, developed by the Bytecode Alliance
- Wasmer
- Wazero, based on Go
- WasmEdge, designed for cloud, edge computing, and AI applications
- Spin, for serverless workloads

I had to choose without being an expert in any of these. I finally settled on WasmEdge because of its focus on the cloud.

We must intercept the code that calls system APIs and redirect it to the runtime. Instead of intercepting at runtime, the Rust ecosystem provides a patch mechanism: we replace the code that calls system APIs with code that calls WASI APIs. We must know which dependency calls which system API and hope that a patch exists for our dependency version.

[patch.crates-io]

tokio = { git = "https://github.com/second-state/wasi_tokio.git", branch = "v1.36.x" } #1-2

socket2 = { git = "https://github.com/second-state/socket2.git", branch = "v0.5.x" } #1

[dependencies]

tokio = { version = "1.36", features = ["rt", "macros", "net", "time", "io-util"] } #2

axum = "0.8"

serde = { version = "1.0.217", features = ["derive"] }

- Patch the tokio and socket2 crates with WASI calls
- The latest tokio crate is 1.43, but the latest (and only) patch is for v1.36. We cannot use the latest version because there is no patch for it.

We must change the Dockerfile to compile to WebAssembly instead of native code:

FROM --platform=$BUILDPLATFORM rust:1.84-slim AS build

RUN <<[...]

- Install the Wasm target
- Compile to Wasm
- We must enable the wasmedge flag, as well as the tokio_unstable one, to compile successfully to WebAssembly
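As a rough sketch of the compile step, the two flags could be passed to the compiler through RUSTFLAGS. The wasm32-wasip1 target name comes from the output path used in the second stage; passing the flags as --cfg values through RUSTFLAGS and the build_wasm function name are assumptions for illustration, not necessarily what the actual Dockerfile does.

```shell
# Hypothetical helper: compile the crate in the current directory to Wasm.
# Assumes the target was installed beforehand with:
#   rustup target add wasm32-wasip1
build_wasm() {
  # Pass the two configuration flags named in the text to rustc
  RUSTFLAGS="--cfg wasmedge --cfg tokio_unstable" \
    cargo build --target wasm32-wasip1 --release
}
```

Calling build_wasm from the crate directory should leave the .wasm file under target/wasm32-wasip1/release/.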

At this stage, we have two options for the second stage:

- Use WasmEdge as a base image:

FROM --platform=$BUILDPLATFORM wasmedge/slim-runtime:0.13.5
COPY --from=build /wasm/target/wasm32-wasip1/release/httpbin.wasm /httpbin.wasm
CMD ["wasmedge", "--dir", ".:/", "/httpbin.wasm"]

From a usage perspective, it is very similar to the native approach.

- Copy the WebAssembly file and make running it the responsibility of the runtime:

FROM scratch
COPY --from=build /wasm/target/wasm32-wasip1/release/httpbin.wasm /httpbin.wasm
ENTRYPOINT ["/httpbin.wasm"]

This is where things get interesting.

The native approach is slightly better than the embed one, but runtime is by far the leanest because it contains only a single WebAssembly file.

Running the Wasm image on Docker

Not all Docker runtimes are equal, and to run Wasm workloads, we need to dig a little into the name Docker. While Docker, the company, created Docker, the product, the current reality is that containers have evolved beyond Docker and now answer to specifications.

The Open Container Initiative is an open governance structure for the express purpose of creating open industry standards around container formats and runtimes.

Established in June 2015 by Docker and other leaders in the container industry, the OCI currently contains three specifications: the Runtime Specification (runtime-spec), the Image Specification (image-spec), and the Distribution Specification (distribution-spec). The Runtime Specification outlines how to run a "filesystem bundle" that is unpacked on disk. At a high level, an OCI implementation would download an OCI Image and then unpack that image into an OCI Runtime filesystem bundle. At this point, the OCI Runtime Bundle would be run by an OCI Runtime.

Open container initiative

From now on, I will use the proper terms: OCI images and containers. Not all OCI runtimes are equal, and far from all of them can run Wasm workloads: OrbStack, my current OCI runtime, cannot, but Docker Desktop can, as an experimental feature. According to the documentation, we must:

- Use containerd for pulling and storing images
- Enable Wasm

Finally, we can run the above OCI image containing a Wasm file by selecting a Wasm runtime, WasmEdge in my case. Let's do it:

docker run --rm -p3000:3000 --runtime=io.containerd.wasmedge.v1 ghcr.io/ajavageek/wasm-kubernetes:runtime

io.containerd.wasmedge.v1 is the current version of the WasmEdge runtime. You must be authenticated with GitHub if you want to try it.

curl localhost:3000/get\?foo=bar | jq

The result is the same as for the native version:

{

"flavor": "runtime",

"args": {

"foo": "bar"

},

"headers": {

"accept": "*/*",

"host": "localhost:3000",

"user-agent": "curl/8.7.1"

},

"url": "/get?foo=bar"

}

WASI on Docker Desktop lets you run an HTTP server that behaves like a regular native image! Even better, the image is as small as the WebAssembly file it contains.

Running the Wasm image on Kubernetes

Now comes the fun part: your favorite cloud provider does not use Docker Desktop. Nevertheless, we can still run WebAssembly workloads on Kubernetes. For this, we need to understand a little about the lower levels of what happens when you run a container, regardless of whether it is started from an OCI runtime or from Kubernetes.

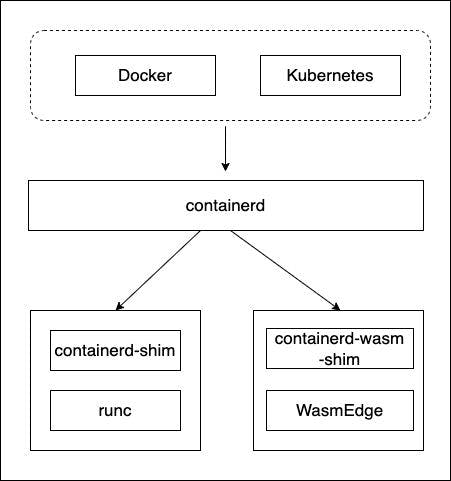

The latter executes a process; in our case, it is containerd. Yet, containerd is nothing but an orchestrator of other container processes. It detects the "flavor" of the container and calls the relevant executable. For example, for "regular" containers, it calls runc via a shim. The good thing is that we can install other shims dedicated to other types of containers, such as Wasm. An illustration from the WasmEdge website summarizes the flow.

Although some major cloud providers offer Wasm integration, none of them provides access at such a low level. I will continue on my laptop, but Docker Desktop does not provide direct integration either: it's time to be creative. For example, minikube is a full-fledged Kubernetes distribution that creates an intermediate Linux virtual machine within a Docker environment. We can SSH into the VM and configure it to our heart's content. Let's start by installing minikube.

brew install minikube

Now, we start minikube with the containerd container runtime and specify a profile so that VMs can be configured differently. We unimaginatively call this profile wasm.

minikube start --driver=docker --container-runtime=containerd -p=wasm

Depending on whether you have already installed minikube and whether it has already downloaded its images, startup can take anywhere from a few seconds to tens of minutes. Be patient. The output should look something like:

[wasm] minikube v1.35.0 on Darwin 15.1.1 (arm64)

Using the docker driver based on user configuration

Using Docker Desktop driver with root privileges

Starting "wasm" primary control-plane node in "wasm" cluster

Pulling base image v0.0.46 ...

minikube was unable to download gcr.io/k8s-minikube/kicbase:v0.0.46, but successfully downloaded docker.io/kicbase/stable:v0.0.46@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279 as a fallback image

Creating docker container (CPUs=2, Memory=12200MB) ...

Preparing Kubernetes v1.32.0 on containerd 1.7.24 ...

Generating certificates and keys ...

Booting up control plane ...

Configuring RBAC rules ...

Configuring CNI (Container Networking Interface) ...

Verifying Kubernetes components...

Using image gcr.io/k8s-minikube/storage-provisioner:v5

Enabled addons: storage-provisioner, default-storageclass

Done! kubectl is now configured to use "wasm" cluster and "default" namespace by default

At this stage, our goal is to install on the underlying VM:

- WasmEdge, to run Wasm workloads
- A shim to bridge between containerd and wasmedge

minikube ssh -p wasm

We could install WasmEdge directly, but I haven't found a place to download the shim. In the next step, we will build both. We first need to install Rust:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

The script is likely to complain that it cannot execute the downloaded binary:

Cannot execute /tmp/tmp.NXPz8utAQx/rustup-init (likely because of mounting /tmp as noexec).

Please copy the file to a location where you can execute binaries and run ./rustup-init.

Follow the instructions:

cp /tmp/tmp.NXPz8utAQx/rustup-init .

./rustup-init

Proceed with the default installation by pressing the ENTER key. Upon completion, source the environment into the current shell.

. "$HOME/.cargo/env"

The system is now ready to build WasmEdge and the shim.

sudo apt-get update

sudo apt-get install -y git

git clone https://github.com/containerd/runwasi.git

cd runwasi

./scripts/setup-linux.sh

make build-wasmedge

INSTALL="sudo install" LN="sudo ln -sf" make install-wasmedge

The last step requires configuring the containerd process with the shim. Insert the following snippet in the [plugins."io.containerd.grpc.v1.cri".containerd.runtimes] section of the /etc/containerd/config.toml file:

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.wasmedgev1]

runtime_type = "io.containerd.wasmedge.v1"
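The edit could also be scripted. The sketch below appends the snippet to the end of the file instead of placing it next to the other runtimes; it relies on TOML accepting a fully qualified table header anywhere in the file. The add_wasmedge_runtime name is hypothetical, and the approach is an assumption rather than the method used in this post.

```shell
# Hypothetical helper: append the WasmEdge runtime table to a containerd
# config file unless a wasmedgev1 entry is already present.
add_wasmedge_runtime() {
  config_file="$1"
  # -F matches the text literally, so dots and brackets need no escaping
  if grep -qF 'containerd.runtimes.wasmedgev1]' "$config_file"; then
    return 0  # already configured, nothing to do
  fi
  cat >> "$config_file" <<'EOF'

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.wasmedgev1]
  runtime_type = "io.containerd.wasmedge.v1"
EOF
}

# Usage on the minikube VM, working on a copy because /etc requires root:
#   cp /etc/containerd/config.toml /tmp/config.toml
#   add_wasmedge_runtime /tmp/config.toml
#   sudo cp /tmp/config.toml /etc/containerd/config.toml
```

Running the helper twice leaves a single entry, which makes it safe to re-run after a failed attempt.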

Restart containerd to load the new configuration.

sudo systemctl restart containerd

Our system is finally ready to accept WebAssembly workloads. Users can deploy a WasmEdge Pod with the following manifest:

apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: wasmedge #1
handler: wasmedgev1 #2
---
apiVersion: v1
kind: Pod
metadata:
  name: runtime
  labels:
    arch: runtime
spec:
  containers:
    - name: runtime
      image: ghcr.io/ajavageek/wasm-kubernetes:runtime
  runtimeClassName: wasmedge #3

- WasmEdge workloads should use this name
- The handler to use. It should be the last segment of the section added in the TOML file, i.e., containerd.runtimes.wasmedgev1
- Points to the RuntimeClass name we defined just above

I used a single Pod instead of a full-fledged Deployment to keep things simple.

Notice the many levels of indirection:

- The Pod refers to the wasmedge RuntimeClass name
- The wasmedge RuntimeClass points to the wasmedgev1 handler
- The wasmedgev1 handler in the TOML file specifies the io.containerd.wasmedge.v1 runtime type
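To make the last hop of that chain concrete, here is a small sketch that looks up the runtime type configured for a handler in a containerd configuration file. The runtime_type_for_handler function is hypothetical and only understands the simple table layout shown earlier; it is not a general TOML parser.

```shell
# Hypothetical helper: given a containerd config file and a handler name,
# print the runtime_type configured for that handler.
runtime_type_for_handler() {
  config_file="$1"
  handler="$2"
  awk -v handler="$handler" '
    /^[[:space:]]*\[/ {
      # Entering a new table: remember whether it belongs to the handler
      in_table = (index($0, "containerd.runtimes." handler "]") > 0)
      next
    }
    in_table && /^[[:space:]]*runtime_type[[:space:]]*=/ {
      split($0, parts, "\"")   # the value sits between the first pair of quotes
      print parts[2]
      exit
    }
  ' "$config_file"
}

# Usage on the minikube VM:
#   runtime_type_for_handler /etc/containerd/config.toml wasmedgev1
```

The first hop can be checked from the Kubernetes side with kubectl get runtimeclass wasmedge -o jsonpath='{.handler}', which prints the handler name to feed into the helper.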

Final steps

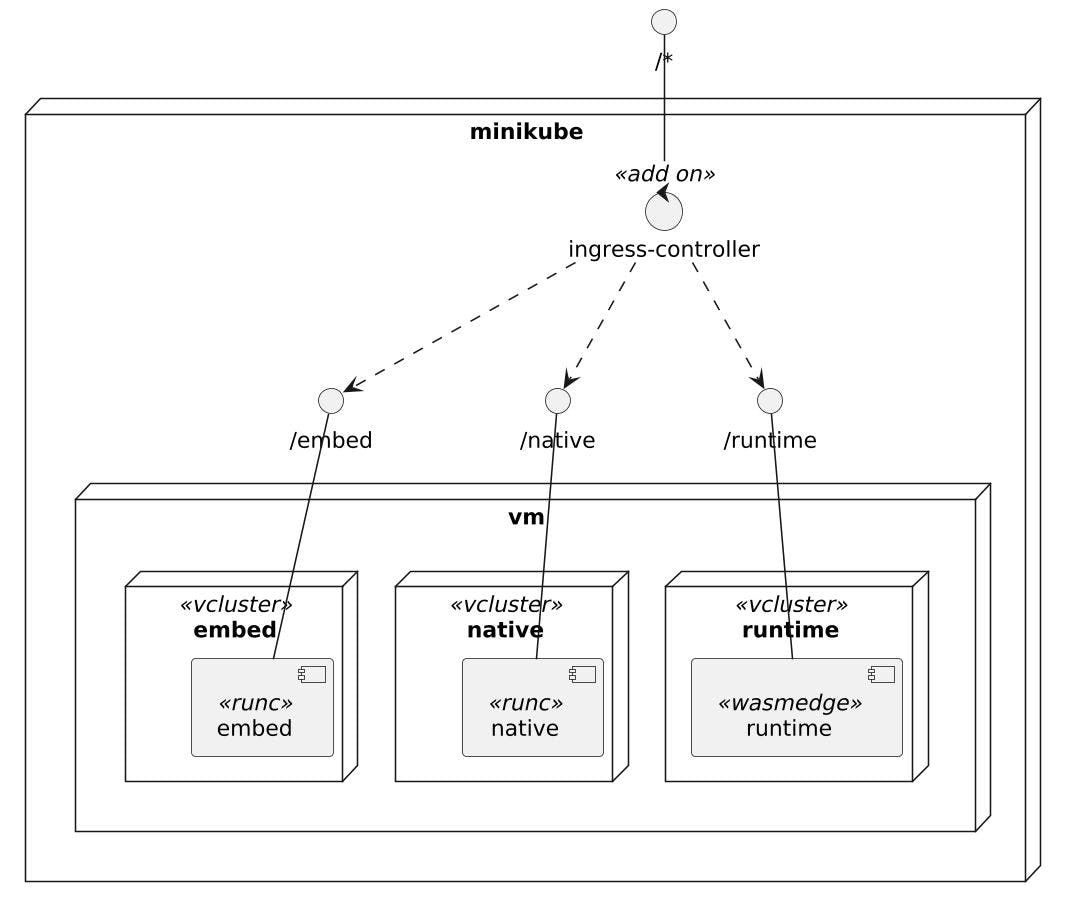

To compare the approaches and test our work, we can use the minikube ingress addon and vCluster. The former provides a single access point for all three workloads, native, embed, and runtime, while vCluster isolates the workloads from each other, each in its own virtual cluster.

Let's start by installing the addon:

minikube -p wasm addons enable ingress

The NGINX Ingress controller is deployed in the ingress-nginx namespace:

ingress is an addon maintained by Kubernetes. For any concerns contact minikube on GitHub.

You can view the list of minikube maintainers at: https://github.com/kubernetes/minikube/blob/master/OWNERS

After the addon is enabled, please run "minikube tunnel" and your ingress resources would be available at "127.0.0.1"

Using image registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.4.4

Using image registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.4.4

Using image registry.k8s.io/ingress-nginx/controller:v1.11.3

Verifying ingress addon...

The 'ingress' addon is enabled

We must create a dedicated virtual cluster in which to deploy the Pod later.

helm upgrade --install runtime vcluster/vcluster --namespace runtime --create-namespace --values vcluster.yaml

We will define the Ingress, the Service, and their related Pod in each virtual cluster. We need vCluster to synchronize the Ingress with the Ingress controller. Here is the configuration to achieve this:

sync:
  toHost:
    ingresses:
      enabled: true

The output should be similar to:

Release "runtime" does not exist. Installing it now.

NAME: runtime

LAST DEPLOYED: Thu Jan 30 11:53:14 2025

NAMESPACE: runtime

STATUS: deployed

REVISION: 1

TEST SUITE: None
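The command above creates the virtual cluster for the runtime flavor only. A minimal sketch for creating all three in one go follows; it assumes that each virtual cluster and its namespace are named after the flavor, and that the vcluster Helm repository is already registered under the name vcluster. The install_vclusters name is hypothetical.

```shell
# Hypothetical helper: install one virtual cluster per flavor.
# Assumes vcluster.yaml (the sync configuration) is in the current directory.
install_vclusters() {
  for flavor in native embed runtime; do
    helm upgrade --install "$flavor" vcluster/vcluster \
      --namespace "$flavor" --create-namespace \
      --values vcluster.yaml || return 1   # stop at the first failure
  done
}
```

Because helm upgrade --install is idempotent, re-running the helper after a partial failure only touches the releases that still need work.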

We can amend the above manifest with a Service and an Ingress to expose the Pod:

apiVersion: v1
kind: Service
metadata:
  name: runtime
spec:
  type: ClusterIP #1
  ports:
    - port: 3000 #1
  selector:
    arch: runtime
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: runtime
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true" #2
    nginx.ingress.kubernetes.io/rewrite-target: /$2 #2
spec:
  ingressClassName: nginx
  rules:
    - host: localhost
      http:
        paths:
          - path: /runtime(/|$)(.*) #3
            pathType: ImplementationSpecific #3
            backend:
              service:
                name: runtime
                port:
                  number: 3000

- Expose the Pod inside the cluster
- NGINX annotations to handle the path as a regular expression and rewrite it
- Regex path
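To see what the regex path and the rewrite target do together, the sketch below mimics the rewrite in plain shell. It only models the /runtime prefix and is an illustration of the rule, not how NGINX implements it; the rewrite_path name is hypothetical.

```shell
# Hypothetical helper mimicking the rewrite for the /runtime prefix:
# path regex /runtime(/|$)(.*) combined with rewrite target /$2.
rewrite_path() {
  case "$1" in
    /runtime)   printf '/\n' ;;                     # $2 is empty
    /runtime/*) printf '/%s\n' "${1#/runtime/}" ;;  # $2 is everything after the slash
    *)          return 1 ;;                         # no match: this rule does not apply
  esac
}

rewrite_path /runtime/get   # prints /get
rewrite_path /runtime       # prints /
```

A path such as /runtimefoo does not match, because the prefix must be followed by a slash or the end of the path.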

NGINX will forward all requests starting with /runtime to the runtime Service, removing the prefix. To apply the manifest, we first connect to the previously created virtual cluster:

vcluster connect runtime

11:53:21 info Waiting for vcluster to come up...

11:53:39 done vCluster is up and running

11:53:39 info Starting background proxy container...

11:53:39 done Switched active kube context to vcluster_embed_embed_vcluster_runtime_runtime_wasm

- Use `vcluster disconnect` to return to your previous kube context

- Use `kubectl get namespaces` to access the vcluster

Now, apply the manifest:

kubectl apply -f runtime.yaml

We do the same for the embed and native Pods, minus the runtimeClassName, because they are "regular" images.
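The difference between the three Pods can be sketched as a small generator. It assumes the image tag matches the flavor name (only the native and runtime tags appear in this post; the embed tag is a guess), and the pod_manifest name is hypothetical.

```shell
# Hypothetical helper: print a Pod manifest for the given flavor.
# Assumption: the image tag is the same as the flavor name.
pod_manifest() {
  flavor="$1"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $flavor
  labels:
    arch: $flavor
spec:
  containers:
    - name: $flavor
      image: ghcr.io/ajavageek/wasm-kubernetes:$flavor
EOF
  # Only the Wasm-only image needs the dedicated RuntimeClass
  if [ "$flavor" = "runtime" ]; then
    printf '  runtimeClassName: wasmedge\n'
  fi
}

# Usage, once connected to the matching virtual cluster:
#   pod_manifest embed | kubectl apply -f -
```

Keeping the RuntimeClass as the only conditional part makes it visible that, from the Kubernetes point of view, nothing else changes between a regular image and a Wasm one.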

The final deployment diagram is the following:

The final touch is to start a tunnel to expose the services:

minikube -p wasm tunnel

Tunnel successfully started

NOTE: Please do not close this terminal as this process must stay alive for the tunnel to be accessible ...

The service/ingress runtime-x-default-x-runtime requires privileged ports to be exposed: [80 443]

sudo permission will be asked for it.

Starting tunnel for service runtime-x-default-x-runtime.

Password:

Let's query the lightweight container that uses the WasmEdge runtime:

curl localhost/runtime/get\?foo=bar | jq

We get the expected output:

{

"flavor": "runtime",

"args": {

"foo": "bar"

},

"headers": {

"user-agent": "curl/8.7.1",

"x-forwarded-host": "localhost",

"x-request-id": "dcbdfde4715fbfc163c7c9098cbdf077",

"x-scheme": "http",

"x-forwarded-for": "10.244.0.1",

"x-forwarded-scheme": "http",

"accept": "*/*",

"x-real-ip": "10.244.0.1",

"x-forwarded-proto": "http",

"host": "localhost",

"x-forwarded-port": "80"

},

"url": "/get?foo=bar"

}

We should get similar results with the other approaches, with different flavor values.
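A quick way to compare the three could be to print only the flavor field of each response. The sketch below assumes the other two workloads are exposed under /native and /embed, by analogy with /runtime; the extract_flavor name is hypothetical.

```shell
# Hypothetical helper: read a JSON response on stdin and print its "flavor" value.
# Uses sed to avoid extra dependencies; jq -r .flavor would do the same job.
extract_flavor() {
  sed -n 's/.*"flavor"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Usage, with the tunnel running (the /native and /embed paths are assumed):
#   for flavor in native embed runtime; do
#     curl -s "localhost/$flavor/get" | extract_flavor
#   done
```

Each iteration should print the name of the flavor that served the request, which confirms that the Ingress routes every prefix to the intended Pod.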

Conclusion

In this post, I showed how to use WebAssembly on Kubernetes with the WasmEdge runtime. I created three flavors for comparison purposes: native, embed, and runtime. The first two are "regular" Docker images, while the last one contains only a single Wasm file, making it lightweight and very secure. However, we need a dedicated runtime to run it.

Managed Kubernetes services do not allow configuring an additional shim, such as the WasmEdge shim. Even on my laptop, I had to be creative to make it happen. I had to use minikube and put a lot of effort into configuring its intermediate virtual machine to run Wasm workloads on Kubernetes. Yet, I managed to run all three images, each inside its own virtual cluster, exposed outside the cluster by the NGINX Ingress controller.

Now, it is up to you to decide whether the extra effort is worth the 10x reduction in image size and the improved security. I hope the future brings better support so that the pros outweigh the cons.

The full source code for this post can be found on GitHub.
