How to improve collective stickers for dialogue systems

Authors:

(1) Clementia Siro, University of Amsterdam, Amsterdam, Netherlands;

(2) Mohamed Elianjadi, University of Amsterdam, Amsterdam, Netherlands;

(3) Martin de Regic, University of Amsterdam, Amsterdam, Netherlands.

Links table

Abstract and 1 introduction

2 methodology and 2.1 experimental data and tasks

2.2 Automatic generation from the various dialogue contexts

2.3 Sourdsource experiments

2.4 Experimental conditions

2.5 Participants

3 results and analysis and 3.1 data statistics

3.2 RQ1: an impact of varying quantity of the context of the dialogue

3.3 RQ2: The effect of the context of the dialogue that was automatically created

4 discussion and implications for

5 relevant work

6 Conclusion, restrictions and ethical considerations

7 thanks, appreciation and references

Appetite

extension

In this section, we offer supplementary materials used to support our main paper. These materials include: the experimental conditions shown in section A. In section a. The A.5 Section shows a complementary context created by GPT-4.

A.1 Experimental Conditions

We include the experimental conditions used in our collective experiences in Table 3.

A.2 Data quality control

User information created and summary. For the potential hallucinations of LLMS (Chang et al We automatically cross the films mentioned in both input dialogues and summaries. The summary must contain at least two thirds of the films mentioned in the input dialogue to be considered valid. If this standard is not met, the summary is eliminated, and a new mark is created after the specific claim requirements. In total, we ignored and renewed 15 dialogue summaries. To ensure more cohesion, we took 30 % random samples of the created information and needs. The authors reviewed them to confirm their cohesion and compatibility with the information provided in the input dialogue. This process strengthened the quality and reliability of the content.

Size stickers. To ensure high quality of the collected data, we have combined interest in checking the questions in the strike. The conditions were asked to determine the number of words in the dialogues they were evaluating and specifying the last film mentioned in the evaluation system response. 10 % of the visits have been rejected and returned to collect new stickers. In total, we collected 1,440 data samples from the group outsourcing mission, with six differences to obtain a link and benefit. We used the majority to vote to create a final link dialogue and interest.

A.3 claims

In Table 4, we offer the final claims used to create user information and a summary of the dial-4.

A.4 Creating Comments Instructions and Screen files

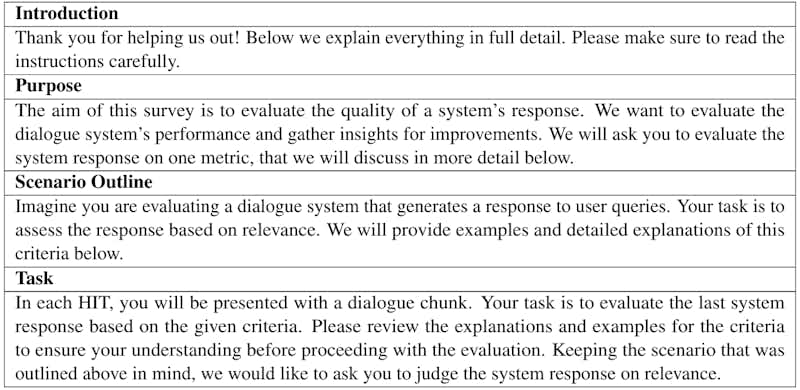

Table 5 clarifies the instructions of the explanatory comments for assessments of importance and interest. In Figure 5 and 6, the interface of the explanatory comments used for the first stage and stage 2, respectively.

A.5 The complementary context sample

In Table 6, we offer a sample of user information in need and a summary created by GPT-4.