Training Time Comparison: Multi-Token Prediction vs. Next-Token Prediction

Table of Links

Abstract and 1. Introduction

2. Method

3. Experiments on real data

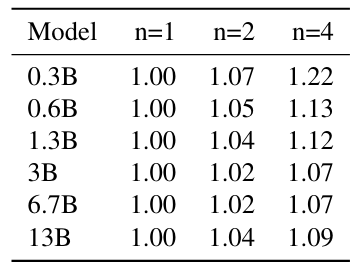

3.1. Benefits scale with model size and 3.2. Faster inference

3.3. Learning global patterns with multi-byte prediction and 3.4. Searching for the optimal n

3.5. Training for multiple epochs and 3.6. Finetuning multi-token predictors

3.7. Multi-token prediction on natural language

4. Ablations on synthetic data and 4.1. Induction capability

4.2. Algorithmic reasoning

5. Why does it work? Some speculation and 5.1. Lookahead reinforces choice points

5.2. Information-theoretic argument

6. Related work

7. Conclusion

A. Additional results on self-speculative decoding

B. Alternative architectures

C. Training speeds

D. Finetuning

E. Additional results on model scaling behavior

F. Details on CodeContests finetuning

G. Additional results on natural language benchmarks

H. Additional results on abstractive text summarization

I. Additional results on mathematical reasoning in natural language

J. Additional results on induction learning

K. Additional results on algorithmic reasoning

L. Additional intuitions on multi-token prediction

M. Training hyperparameters

Training speeds


:::info
This paper is available on arXiv under the CC BY 4.0 DEED license.

:::

:::info

Authors:

(1) Fabian Gloeckle, FAIR at Meta, CERMICS Ecole des Ponts ParisTech, and contributed equally;

(2) Badr Youbi Idrissi, FAIR at Meta, LISN Université Paris-Saclay, and contributed equally;

(3) Baptiste Rozière, FAIR at Meta;

(4) David Lopez-Paz, FAIR at Meta and a last author;

(5) Gabriel Synnaeve, FAIR at Meta and a last author.

:::
